August 12, 2019

Flathub, brought to you by…

Over the past 2 years Flathub has evolved from a wild idea at a hackfest to a community of app developers and publishers making over 600 apps available to end-users on dozens of Linux-based OSes. We couldn’t have gotten anything off the ground without the support of the 20 or so generous souls who backed our initial fundraising, and to make the service a reality since then we’ve relied on on the contributions of dozens of individuals and organisations such as Codethink, Endless, GNOME, KDE and Red Hat. But for our day to day operations, we depend on the continuous support and generosity of a few companies who provide the services and resources that Flathub uses 24/7 to build and deliver all of these apps. This post is about saying thank you to those companies!

Running the infrastructure

Mythic Beasts is a UK-based “no-nonsense” hosting provider who provide managed and un-managed co-location, dedicated servers, VPS and shared hosting. They are also conveniently based in Cambridge where I live, and very nice people to have a coffee or beer with, particularly if you enjoy talking about IPv6 and how many web services you can run on a rack full of Raspberry Pis. The “heart” of Flathub is a physical machine donated by them which originally ran everything in separate VMs – buildbot, frontend, repo master – and they have subsequently increased their donation with several VMs hosted elsewhere within their network. We also benefit from huge amounts of free bandwidth, backup/storage, monitoring, management and their expertise and advice at scaling up the service.

Starting with everything running on one box in 2017 we quickly ran into scaling bottlenecks as traffic started to pick up. With Mythic’s advice and a healthy donation of 100s of GB / month more of bandwidth, we set up two caching frontend servers running in virtual machines in two different London data centres to cache the commonly-accessed objects, shift the load away from the master server, and take advantage of the physical redundancy offered by the Mythic network.

As load increased and we brought a CDN online to bring the content closer to the user, we also moved the Buildbot (and it’s associated Postgres database) to a VM hosted at Mythic in order to offload as much IO bandwidth from the repo server, to keep up sustained HTTP throughput during update operations. This helped significantly but we are in discussions with them about a yet larger box with a mixture of disks and SSDs to handle the concurrent read and write load that we need.

Even after all of these changes, we keep the repo master on one, big, physical machine with directly attached storage because repo update and delta computations are hugely IO intensive operations, and our OSTree repos contain over 9 million inodes which get accessed randomly during this process. We also have a physical HSM (a YubiKey) which stores the GPG repo signing key for Flathub, and it’s really hard to plug a USB key into a cloud instance, and know where it is and that it’s physically secure.

Building the apps

Our first build workers were under Alex’s desk, in Christian’s garage, and a VM donated by Scaleway for our first year. We still have several ARM workers donated by Codethink, but at the start of 2018 it became pretty clear within a few months that we were not going to keep up with the growing pace of builds without some more serious iron behind the Buildbot. We also wanted to be able to offer PR and test builds, beta builds, etc — all of which multiplies the workload significantly.

Thanks to an introduction by the most excellent Jorge Castro and the approval and support of the Linux Foundation’s CNCF Infrastructure Lab, we were able to get access to an “all expenses paid” account at Packet. Packet is a “bare metal” cloud provider — like AWS except you get entire boxes and dedicated switch ports etc to yourself – at a handful of main datacenters around the world with a full range of server, storage and networking equipment, and a larger number of edge facilities for distribution/processing closer to the users. They have an API and a magical provisioning system which means that at the click of a button or one method call you can bring up all manner of machines, configure networking and storage, etc. Packet is clearly a service built by engineers for engineers – they are smart, easy to get hold of on e-mail and chat, share their roadmap publicly and set priorities based on user feedback.

We currently have 4 Huge Boxes (2 Intel, 2 ARM) from Packet which do the majority of the heavy lifting when it comes to building everything that is uploaded, and also use a few other machines there for auxiliary tasks such as caching source downloads and receiving our streamed logs from the CDN. We also used their flexibility to temporarily set up a whole separate test infrastructure (a repo, buildbot, worker and frontend on one box) while we were prototyping recent changes to the Buildbot.

A special thanks to Ed Vielmetti at Packet who has patiently supported our requests for lots of 32-bit compatible ARM machines, and for his support of other Linux desktop projects such as GNOME and the Freedesktop SDK who also benefit hugely from Packet’s resources for build and CI.

Delivering the data

Even with two redundant / load-balancing front end servers and huge amounts of bandwidth, OSTree repos have so many files that if those servers are too far away from the end users, the latency and round trips cause a serious problem with throughput. In the end you can’t distribute something like Flathub from a single physical location – you need to get closer to the users. Fortunately the OSTree repo format is very efficient to distribute via a CDN, as almost all files in the repository are immutable.

After a very speedy response to a plea for help on Twitter, Fastly – one of the world’s leading CDNs – generously agreed to donate free use of their CDN service to support Flathub. All traffic to the dl.flathub.org domain is served through the CDN, and automatically gets cached at dozens of points of presence around the world. Their service is frankly really really cool – the configuration and stats are reallly powerful, unlike any other CDN service I’ve used. Our configuration allows us to collect custom logs which we use to generate our Flathub stats, and to define edge logic in Varnish’s VCL which we use to allow larger files to stream to the end user while they are still being downloaded by the edge node, improving throughput. We also use their API to purge the summary file from their caches worldwide each time the repository updates, so that it can stay cached for longer between updates.

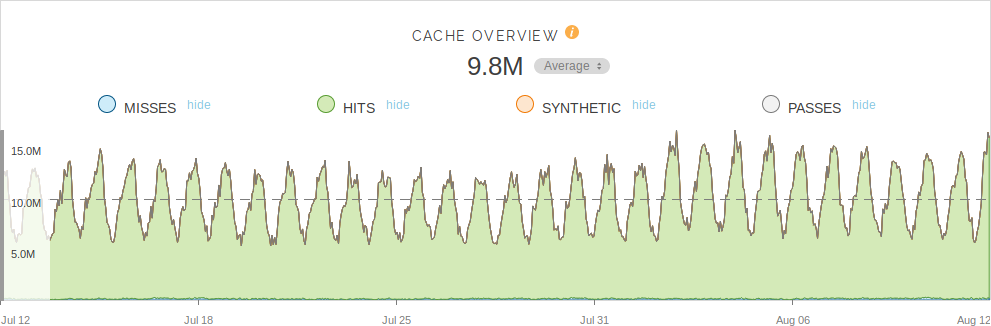

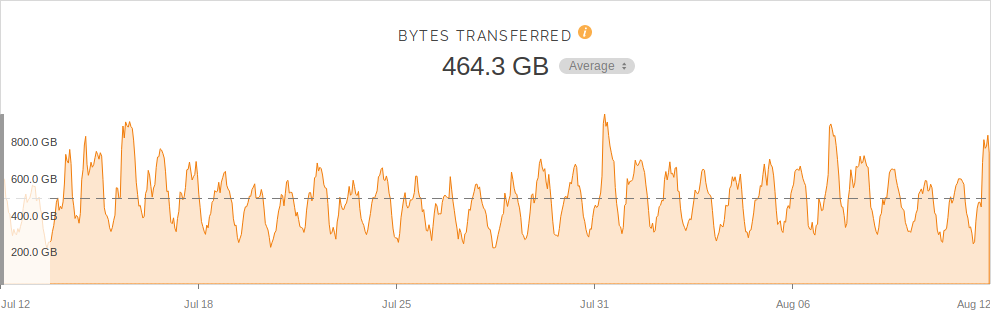

To get some feelings for how well this works, here are some statistics: The Flathub main repo is 929 GB, of which 73 GB are static deltas and 1.9 GB of screenshots. It contains 7280 refs for 640 apps (plus runtimes and extensions) over 4 architectures. Fastly is serving the dl.flathub.org domain fully cached, with a cache hit rate of ~98.7%. Averaging 9.8 million hits and 464 Gb downloaded per hour, Flathub uses between 1-2 Gbps sustained bandwidth depending on the time of day. Here are some nice graphs produced by the Fastly management UI (the numbers are per-hour over the last month):

To buy the scale of services and support that Flathub receives from our commercial sponsors would cost tens if not hundreds of thousands of dollars a month. Flathub could not exist without Mythic Beasts, Packet and Fastly‘s support of the free and open source Linux desktop. Thank you!

Calendar

| M | T | W | T | F | S | S |

|---|---|---|---|---|---|---|

| 1 | 2 | 3 | 4 | |||

| 5 | 6 | 7 | 8 | 9 | 10 | 11 |

| 12 | 13 | 14 | 15 | 16 | 17 | 18 |

| 19 | 20 | 21 | 22 | 23 | 24 | 25 |

| 26 | 27 | 28 | 29 | 30 | 31 | |

Links

Archives

- April 2024

- February 2024

- March 2023

- November 2022

- May 2022

- February 2022

- June 2021

- January 2021

- August 2019

- October 2018

- July 2017

- May 2010

- October 2009

- August 2009

- July 2009

- March 2009

- January 2009

- July 2008

- June 2008

- April 2008

- May 2007

- January 2007

- December 2006

- June 2006

- April 2006

- March 2006

- November 2005

- October 2005

- September 2005

- August 2005

- July 2005

- May 2005

- April 2005

- March 2005